Causality provides a formal language for analyzing and understanding subtle problems in machine learning; in particular, it can formalize reasonable notions of distribution shift. At its core, causality combines probability with the notion of intervention, and a distribution shift can be viewed as a type of unknown intervention. This project explores how causality can inspire and help analyze core ML robustness problems.

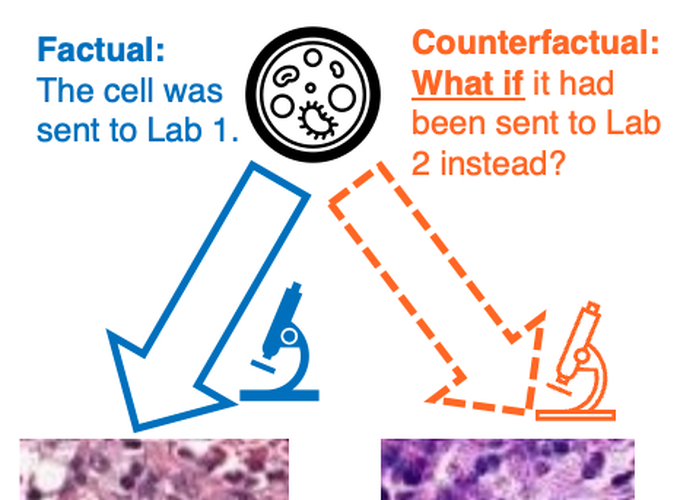

One recent direction uses domain counterfactuals: counterfactuals between two domains that answer the question, “What would this sample have been like if it had been observed in the other domain or environment?” Our work has shown applications of domain counterfactuals to distribution shift explanations, counterfactual fairness, and out-of-distribution robustness. We have also worked on estimating counterfactuals given only data from the domains by leveraging a sparsity-of-intervention hypothesis.
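
To make the idea of a domain counterfactual concrete, here is a minimal toy sketch. It is not the estimation method from our work: it assumes the causal mechanisms of both domains are already known, linear, and invertible, whereas in practice they would have to be estimated from data. The class `DomainSCM`, its parameters, and the function `domain_counterfactual` are hypothetical names introduced only for this illustration. The two domains differ in a single mechanism (the one generating `x3`), which loosely mirrors a sparse intervention.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainSCM:
    """Toy linear chain x1 -> x2 -> x3 with additive noise (hypothetical)."""
    w12: float  # effect of x1 on x2
    w23: float  # effect of x2 on x3
    b3: float   # offset in the x3 mechanism

    def generate(self, noise):
        """Map exogenous noise (e1, e2, e3) to an observed sample."""
        e1, e2, e3 = noise
        x1 = e1
        x2 = self.w12 * x1 + e2
        x3 = self.w23 * x2 + self.b3 + e3
        return (x1, x2, x3)

    def abduct(self, x):
        """Invert the mechanisms to recover the noise behind a sample."""
        x1, x2, x3 = x
        e1 = x1
        e2 = x2 - self.w12 * x1
        e3 = x3 - self.w23 * x2 - self.b3
        return (e1, e2, e3)


def domain_counterfactual(x, source, target):
    """Abduct noise in the source domain, then replay it in the target domain."""
    return target.generate(source.abduct(x))


# Assumed example domains: only the x3 mechanism differs between them.
domain_a = DomainSCM(w12=2.0, w23=1.0, b3=0.0)
domain_b = DomainSCM(w12=2.0, w23=-1.0, b3=3.0)

x_a = domain_a.generate((1.0, 0.5, -0.25))                 # (1.0, 2.5, 2.25)
x_cf = domain_counterfactual(x_a, domain_a, domain_b)      # (1.0, 2.5, 0.25)
print("observed in A:", x_a)
print("counterfactual in B:", x_cf)
```

In this sketch, `x1` and `x2` are unchanged in the counterfactual because their mechanisms are shared across domains; only `x3` changes. Mapping the counterfactual back to the original domain recovers the observed sample, which is a consequence of the invertibility assumption.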