While modern AI performs well in certain and closed-world contexts, it may fail in uncertain and open-world contexts. For example, individual sensors of an AI system may be noisy or fail intermittently, causing incorrect or missing values. Beyond uncertainty, the open-world nature of real-world contexts means that the operating environment changes in complex ways. Some environment changes are exogenous to the system, such as a change in foreign policy or a world event, while others directly affect the inputs to the system, such as a change in weather, location, or sensor network. Given these deficiencies in modern AI, human oversight is required for practical systems. To make that oversight effective, a contextual AI system should be able to explain its uncertainty and the changes in its environment.

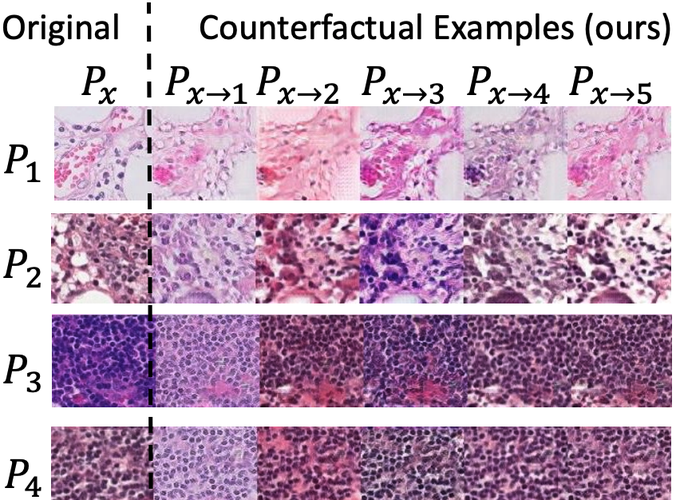

This research area aims to fill these gaps in contextual AI by (1) providing self-explainable neural networks, (2) combining efficient input and parameter uncertainty methods into a unified uncertainty framework, and (3) developing a general method for explaining distribution shifts between environments. Specifically, we incorporate Shapley feature attribution values [Lundberg & Lee, 2017] as latent representations in deep models, thereby making Shapley explanations first-class citizens in the modeling paradigm [Wang et al., 2021]. We study the problem of estimating uncertainty due to missing values. We explain distribution shifts via transport maps between two distributions [Kulinski & Inouye, 2022a, 2022b]. We have also explored the connection of distribution matching to invariance and causal inference queries so that we may enable causally-inspired explanations via domain counterfactuals [Zhou et al., 2023]. Access to causal structure is expected to unlock both counterfactual explanations and simpler explanations.
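As background for the Shapley feature attributions mentioned above, the following is a minimal sketch of exact Shapley value computation for a small model. It is not the Shapley Explanation Networks method itself, only the underlying attribution definition. The function names (`shapley_values`, `make_value_fn`, `toy_model`) are hypothetical, and replacing absent features with a fixed baseline is an assumption made for illustration; other choices, such as taking an expectation over a reference distribution, are also common.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values for a set function value_fn(frozenset) -> float.

    Enumerates all subsets, so the cost grows exponentially with n_features.
    """
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(n_features):
            # Weight of a coalition of this size in the Shapley formula.
            weight = (
                factorial(size)
                * factorial(n_features - size - 1)
                / factorial(n_features)
            )
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def make_value_fn(model, x, baseline):
    """Assumption: features outside the coalition are set to a baseline value."""
    def value_fn(subset):
        masked = [x[j] if j in subset else baseline[j] for j in range(len(x))]
        return model(masked)
    return value_fn


def toy_model(z):
    # Hypothetical model: one additive term plus one pairwise interaction.
    return 2.0 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(make_value_fn(toy_model, x, baseline), len(x))
print(phi)
```

In this toy case the additive term is attributed entirely to the first feature, the interaction term is split evenly between the other two, and the attributions sum to the difference between the model output at `x` and at the baseline.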

References

Khosravi, P., Choi, Y., Liang, Y., Vergari, A., & Van den Broeck, G. (2019). On tractable computation of expected predictions. In Neural Information Processing Systems (NeurIPS), 32.

Kulinski, S., & Inouye, D. I. (2022). Towards Explaining Image-Based Distribution Shifts. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops.

Kulinski, S., & Inouye, D. I. (2022). Towards Explaining Distribution Shifts. arXiv preprint arXiv:2210.10275.

Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. In Neural Information Processing Systems (NeurIPS), 30.

Wang, R., Wang, X., & Inouye, D. (2021). Shapley Explanation Networks. In International Conference on Learning Representations (ICLR).

Zhou, Z., Bai, R., Kulinski, S., Kocaoglu, M., & Inouye, D. (2023). Towards Characterizing Domain Counterfactuals For Invertible Latent Causal Models. arXiv preprint arXiv:2306.11281.