This project aims to improve the robustness of machine learning models, including out-of-distribution (OOD) robustness (e.g., domain generalization and domain adaptation) and fairness, which can be viewed as robustness to changes in sensitive attributes. The core problem is distribution shift, i.e., the test distribution differs from the training distribution. We leverage tools including causality and distribution matching.

Conditional distribution alignment

Robust ML

Publications

(New!) Your VAR Model is Secretly an Efficient and Explainable Generative Classifier

Generative classifiers, which leverage conditional generative models for classification, have recently demonstrated desirable …

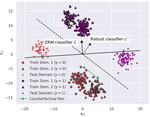

From Invariant Representations to Invariant Data: Provable Robustness to Spurious Correlations via Noisy Counterfactual Matching

Spurious correlations can cause model performance to degrade in new environments. Prior causality-inspired works aim to learn invariant …

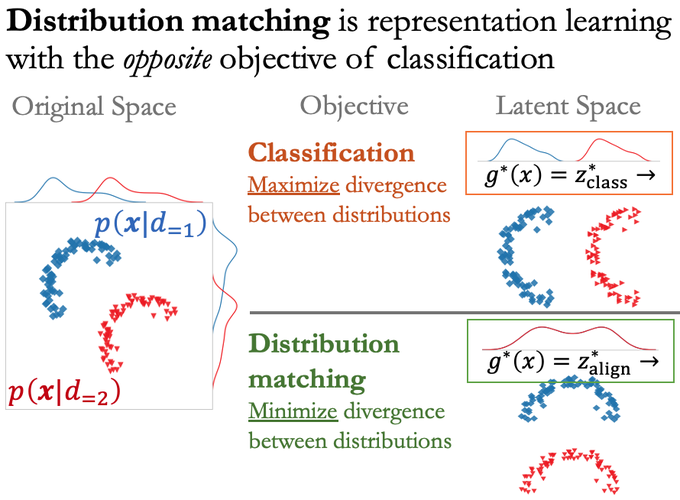

Expressive Score-Based Priors for Distribution Matching with Geometry-Preserving Regularization

Distribution matching (DM) is a versatile domain-invariant representation learning technique that has been applied to tasks such as …

Vertical Validation: Evaluating Implicit Generative Models for Graphs on Thin Support Regions

There has been a growing excitement that implicit graph generative models could be used to design or discover new molecules for …

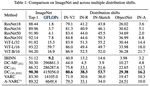

Benchmarking Algorithms for Federated Domain Generalization

While prior federated learning (FL) methods mainly consider client heterogeneity, we focus on the Federated Domain Generalization (DG) …

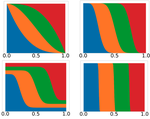

Towards Practical Non-Adversarial Distribution Matching

Distribution matching can be used to learn invariant representations with applications in fairness and robustness. Most prior works …

Efficient Federated Domain Translation

A central theme in federated learning (FL) is the fact that client data distributions are often not independent and identically …

Cooperative Distribution Alignment via JSD Upper Bound

Unsupervised distribution alignment estimates a transformation that maps two or more source distributions to a shared aligned …

Iterative Alignment Flows

The unsupervised task of aligning two or more distributions in a shared latent space has many applications including fair …

Feature Shift Detection: Localizing Which Features Have Shifted via Conditional Distribution Tests

While previous distribution shift detection approaches can identify if a shift has occurred, these approaches cannot localize which …